Client

A growing SaaS platform offering AI-powered image enhancement and transformation tools to global users via a web-based interface.

Project Overview

The client wanted to add AI image processing features (upscaling, enhancement, filters, transformations) without:

- Locking into a single cloud provider
- Overpaying for GPUs
- Interrupting their existing AWS-based backend and frontend

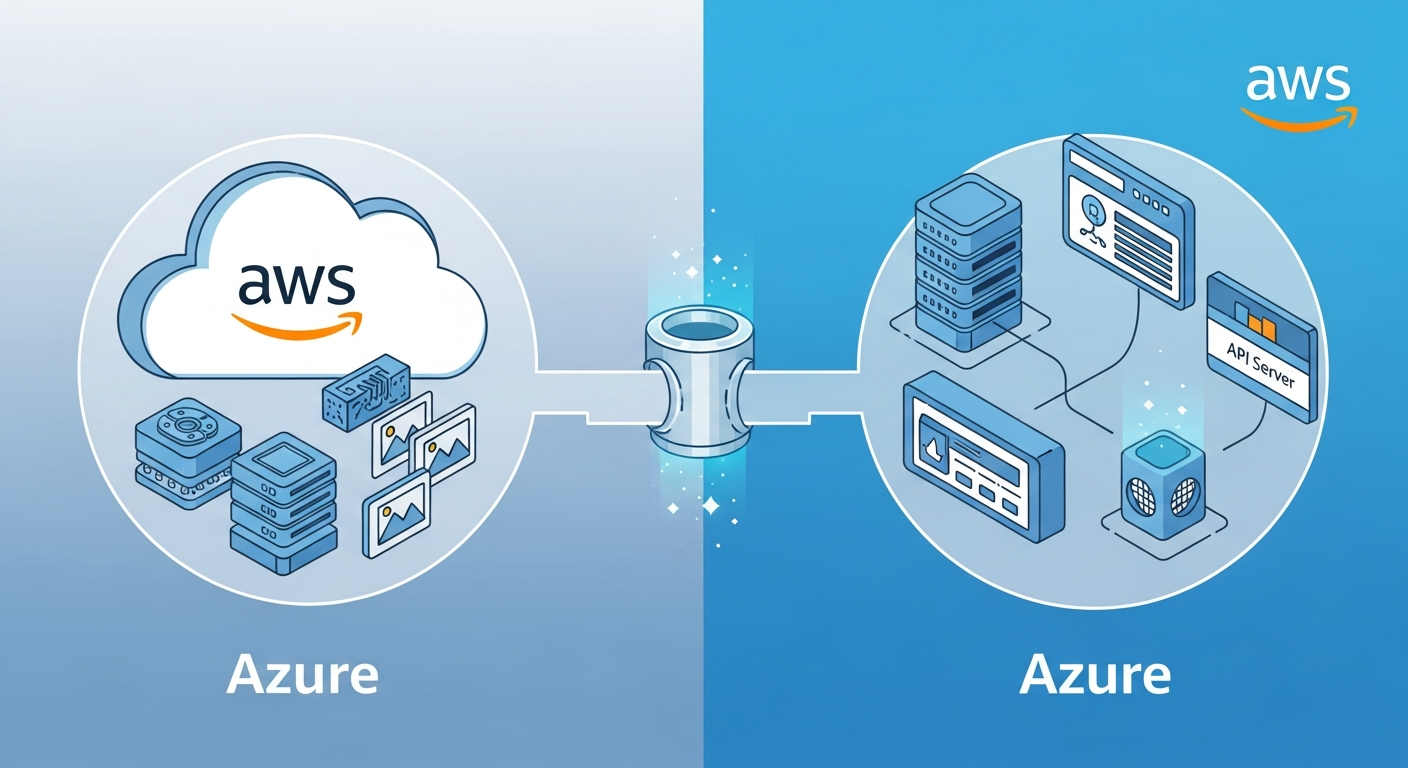

We designed a multi-cloud architecture where:

- Frontend + main backend APIs run on AWS
- GPU-optimized image processing runs on Azure
- Both clouds communicate securely over socket-based connections
- Users experience a single, seamless app, even though two clouds are working behind the scenes

Key Challenges

1. Existing Stack on AWS

The client was already invested in AWS (EC2, S3, RDS, etc.) and didn’t want a full migration.

2. Need for Cost-Effective GPU Power

Azure GPU instances gave better pricing/availability for their region, but the rest of the system was on AWS.

3. Low-Latency Cross-Cloud Communication

We needed a way for the AWS backend to send image jobs to the Azure GPU server and receive results quickly and reliably.

4. Failure Handling & Monitoring

If Azure or the GPU node had issues, the system still needed to respond gracefully on the AWS side.

Our Solution

1. Multi-Cloud Split: AWS for Web, Azure for Compute

We kept the web layer and core APIs in AWS to avoid disrupting the existing architecture:

- AWS
  - Frontend (React/Next.js, etc.) hosted on AWS (e.g. S3 + CloudFront or EC2)
  - Backend API server on EC2
  - Database (e.g. RDS)
- Azure
  - Dedicated GPU VM (e.g. NV-series) for image processing
  - Optimized runtime for AI models (PyTorch, ONNX, etc.)

The AWS backend is the “brain” and user-facing entry point. The Azure GPU server is the “muscle” for heavy image tasks.

2. Socket-Based Communication Between AWS & Azure

We implemented a persistent socket connection between:

- AWS backend service
- Azure GPU processing service

The flow:

- The user uploads an image via the frontend hosted on AWS.
- The AWS backend receives the request and queues a processing job.
- The AWS backend sends the job over the socket connection to the Azure GPU server, opening the connection first if it is not already established.
- The Azure GPU server processes the image (enhancement, upscaling, filters).
- The processed image or a result URL is sent back over the socket to AWS.
- AWS returns the response to the frontend and/or stores the output (S3 or similar).
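The job hand-off in the flow above could be sketched as follows. This is a minimal illustration, not the project's actual protocol: the 4-byte length prefix, the JSON payload, the field names (`job_id`, `operation`, `result_url`), and the `handle_job` stand-in are all assumptions made for the example.

```python
import json
import socket
import struct

# Assumed framing: a 4-byte big-endian length, followed by a UTF-8 JSON body.
HEADER = struct.Struct("!I")


def send_message(sock: socket.socket, payload: dict) -> None:
    """Serialize a payload and send it as one length-prefixed frame."""
    body = json.dumps(payload).encode("utf-8")
    sock.sendall(HEADER.pack(len(body)) + body)


def _recv_exact(sock: socket.socket, size: int) -> bytes:
    """Read exactly `size` bytes, since recv() may return fewer than requested."""
    chunks = []
    remaining = size
    while remaining:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_message(sock: socket.socket) -> dict:
    """Read one length-prefixed frame and decode its JSON body."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


def handle_job(job: dict) -> dict:
    """Hypothetical stand-in for the GPU service; no real processing happens."""
    return {
        "job_id": job["job_id"],
        "status": "COMPLETED",
        "result_url": f"https://example.invalid/results/{job['job_id']}.png",
    }


def demo_round_trip() -> dict:
    """Simulate both ends locally with a connected socket pair."""
    aws_side, azure_side = socket.socketpair()
    try:
        send_message(aws_side, {"job_id": "job-1", "operation": "upscale"})
        job = recv_message(azure_side)
        send_message(azure_side, handle_job(job))
        return recv_message(aws_side)
    finally:
        aws_side.close()
        azure_side.close()


if __name__ == "__main__":
    print(demo_round_trip())
```

A length prefix is one common way to mark message boundaries on a stream socket. In a real deployment the two ends would be a TCP connection between the clouds rather than a local socket pair.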

Benefits of sockets:

- Low-latency, real-time communication
- Persistent connection (less per-job overhead than opening a new HTTP connection for each request)
- Easier to implement job streaming / progress in the future

3. GPU-Optimized Image Processing Service on Azure

On the Azure GPU server we built a dedicated processing service:

- Runs AI models for image enhancement/upscaling
- Exposes a lightweight internal protocol over sockets
- Uses:
  - GPU scheduling (queue incoming jobs)
  - Batch processing for heavy workloads
  - Proper memory cleanup to avoid GPU leaks

We also added:

- Health checks
- Job timeout handling
- Logging of processing time per image
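Job queuing, timeout handling, and per-image timing could be combined roughly as below. This is a sketch under stated assumptions: a single-worker thread pool stands in for the GPU queue, and `run_with_timeout`, `process_fn`, and the result fields are hypothetical names, not the service's real interface.

```python
import logging
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

logger = logging.getLogger("gpu_worker")


def run_with_timeout(executor, process_fn, job, timeout_s):
    """Run one job on the executor, enforce a deadline, and log the elapsed time.

    The elapsed time is measured from submission, so it includes any time
    the job spent waiting behind earlier jobs in the queue.
    """
    start = time.perf_counter()
    future = executor.submit(process_fn, job)
    try:
        result = future.result(timeout=timeout_s)
    except FutureTimeout:
        # cancel() only helps if the job has not started yet; a job that is
        # already running keeps its worker thread busy until it finishes.
        future.cancel()
        logger.warning("job %s timed out after %.2fs", job["job_id"], timeout_s)
        return {"job_id": job["job_id"], "status": "FAILED", "error": "timeout"}
    except Exception as exc:
        logger.error("job %s failed: %s", job["job_id"], exc)
        return {"job_id": job["job_id"], "status": "FAILED", "error": str(exc)}
    elapsed = time.perf_counter() - start
    logger.info("job %s processed in %.3fs", job["job_id"], elapsed)
    return {
        "job_id": job["job_id"],
        "status": "COMPLETED",
        "result": result,
        "elapsed_s": elapsed,
    }


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    # max_workers=1 serializes jobs, mimicking a single GPU handling one job at a time.
    with ThreadPoolExecutor(max_workers=1) as gpu_queue:
        print(run_with_timeout(gpu_queue, lambda j: "enhanced", {"job_id": "a"}, 1.0))
```

Because Python threads cannot be forcibly stopped, a production service would more likely run each job in a separate process or check a deadline inside the model loop. The sketch only shows where the timeout and timing hooks sit.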

4. Security & Network Setup

To secure cross-cloud communication:

- Socket traffic restricted via IP whitelisting
- Firewalls / NSG rules on Azure to allow only the AWS backend IP(s)
- All communication over TLS-encrypted channels
- Optional VPN / private tunnel for even tighter security depending on client needs
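At the application level, the allowlist and TLS requirements above might look like the following sketch. The address in `ALLOWED_NETWORKS` is a placeholder from a documentation range, the file-path parameters are hypothetical, and requiring client certificates (mutual TLS) is an extra assumption that the write-up does not confirm.

```python
import ipaddress
import ssl
from typing import Optional

# Placeholder address from a documentation range, standing in for the AWS backend IP(s).
ALLOWED_NETWORKS = [ipaddress.ip_network("203.0.113.10/32")]


def is_allowed(peer_ip: str) -> bool:
    """Return True if the connecting peer falls inside an allowed network."""
    addr = ipaddress.ip_address(peer_ip)
    return any(addr in net for net in ALLOWED_NETWORKS)


def build_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a TLS context for the GPU service's listening socket.

    The file paths are hypothetical; without them the context is only a
    configuration skeleton and cannot complete a handshake.
    """
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # Assumed extra: require the AWS backend to present a client certificate.
        ctx.verify_mode = ssl.CERT_REQUIRED
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx


if __name__ == "__main__":
    print(is_allowed("203.0.113.10"), is_allowed("198.51.100.7"))
```

The network-level rules (firewalls and NSGs) remain the primary control. An in-process check like `is_allowed` is a second layer that also produces a useful log line when an unexpected peer connects.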

5. Error Handling & Fallbacks

We implemented robust failure handling:

- If Azure GPU server is unreachable:
  - AWS returns a graceful error or queues the job for retry
- Clear status codes:
  - QUEUED, PROCESSING, COMPLETED, FAILED
- Logging and alerts for:
  - GPU errors
  - Socket disconnections
  - High latency
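The status codes and the retry-on-unreachable behavior could fit together as in this sketch. Only the four status names come from the description above; the exponential backoff, the attempt limit, the record fields, and the `send_fn` callable are assumptions for illustration.

```python
import time
from enum import Enum


class JobStatus(str, Enum):
    QUEUED = "QUEUED"
    PROCESSING = "PROCESSING"
    COMPLETED = "COMPLETED"
    FAILED = "FAILED"


def submit_with_retry(send_fn, job, max_attempts=3, base_delay_s=0.5, sleep=time.sleep):
    """Send a job to the GPU service, retrying when the connection fails.

    `send_fn` is a hypothetical callable that raises OSError (which covers
    ConnectionError and TimeoutError) when the GPU service is unreachable.
    """
    record = {"job_id": job["job_id"], "status": JobStatus.QUEUED, "attempts": 0}
    for attempt in range(1, max_attempts + 1):
        record["attempts"] = attempt
        record["status"] = JobStatus.PROCESSING
        try:
            record["result"] = send_fn(job)
        except OSError as exc:
            # Put the job back in the queue and wait before the next attempt.
            record["status"] = JobStatus.QUEUED
            record["error"] = str(exc)
            if attempt < max_attempts:
                sleep(base_delay_s * 2 ** (attempt - 1))
            continue
        record["status"] = JobStatus.COMPLETED
        record.pop("error", None)
        return record
    # Every attempt failed: report a graceful error instead of raising.
    record["status"] = JobStatus.FAILED
    return record


if __name__ == "__main__":
    def unreachable(job):
        raise ConnectionError("GPU service unreachable")

    print(submit_with_retry(unreachable, {"job_id": "demo"}, sleep=lambda s: None))
```

Returning a record with an explicit status, rather than raising, lets the API layer map `FAILED` to a clear response for the frontend while the logged error feeds the alerts listed above.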

Architecture Diagram (Text Description)

Results / Impact

⚡ Faster Image Processing

The GPU-accelerated Azure server cut heavy image processing time from 10–15 seconds to 2–4 seconds, depending on resolution and model.

🌐 No Vendor Lock-In

The client now uses AWS for the core platform and Azure for GPU compute, picking the best of both worlds.

💸 Optimized Cloud Costs

By placing only the GPU workloads on Azure and keeping the web frontend and backend APIs on AWS, the client:

- Avoided a full migration
- Took advantage of better GPU pricing/availability in Azure
- Optimized costs for both compute and hosting

🧩 Seamless User Experience

Even though two clouds are involved, the user interacts only with one frontend on AWS. All cross-cloud complexity is hidden in the backend.

🛡 Improved Scalability & Flexibility

In the future, they can:

- Add more GPU nodes on Azure
- Extend to another provider if needed

Both are possible without touching the main frontend/backend logic.

Tools & Technologies Used

- AWS: EC2 (plus S3/RDS/CloudFront, if used)
- Azure: GPU VM (e.g. NV-series), Linux
- Backend: Node.js / Python (depending on stack), socket-based communication
- GPU Stack: CUDA, cuDNN, PyTorch / ONNX / other AI frameworks
- Security: Firewalls, IP whitelisting, TLS, monitoring & logging

Conclusion

By implementing a multi-cloud architecture with AWS + Azure, we delivered:

- A fast, GPU-powered image processing engine on Azure
- A stable, familiar backend and frontend on AWS
- A low-latency socket bridge between them
- A platform that is flexible, scalable, and not tied to a single cloud provider

This gives the client technical freedom and better pricing options while keeping a seamless user experience.

Written by

Oliver Thomas
