Client

A fast-growing platform with increasing API traffic across mobile and web clients. Their existing API struggled with:

- Slow response times
- Heavy database load
- Inefficient query patterns
- Unpredictable performance during peak traffic

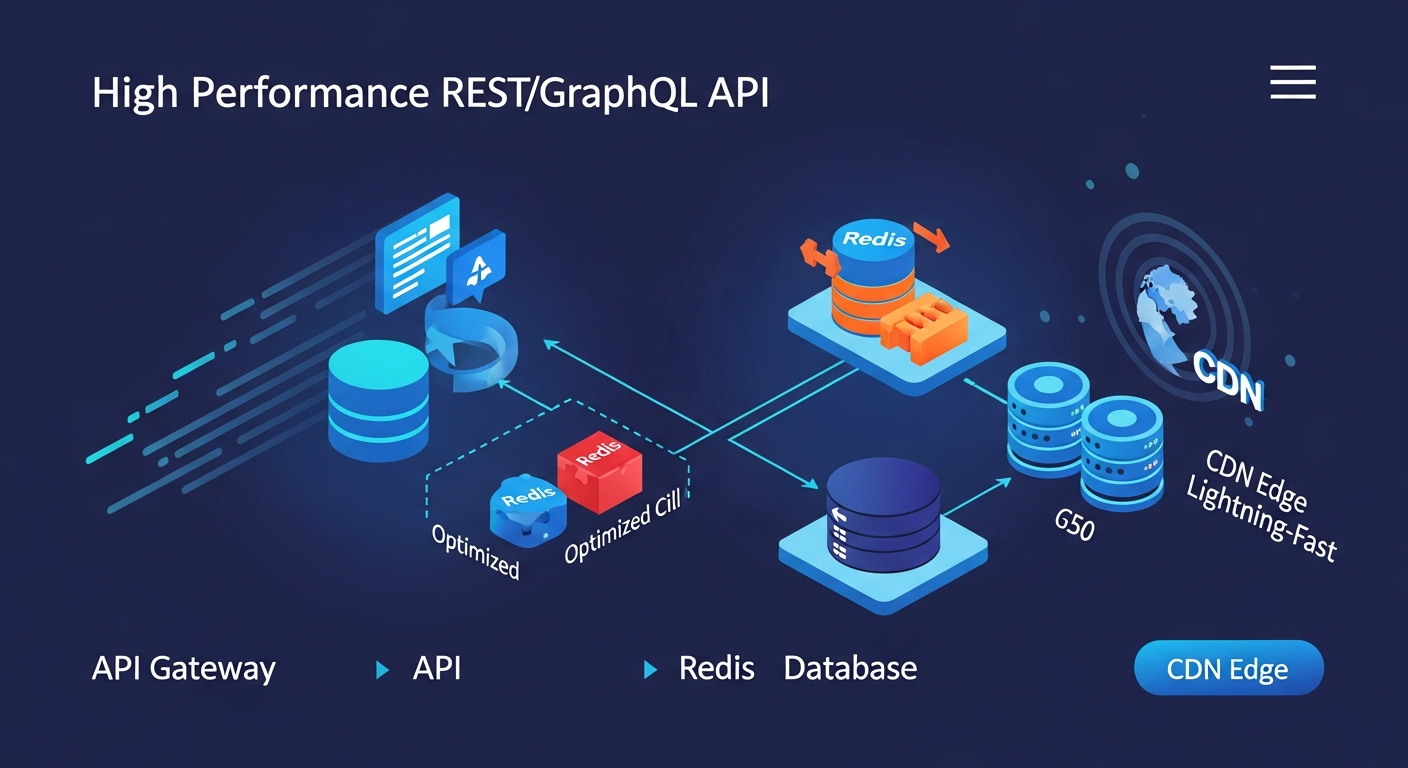

They needed a high-performance REST/GraphQL API that could scale while staying fast.

Project Overview

We redesigned and optimized the client's API layer by:

- Improving REST and GraphQL resolver performance
- Optimizing heavy, inefficient database queries
- Adding Redis for caching hot data
- Leveraging CDN caching for static/edge responses
- Adding performance monitoring and metrics

The result: much faster response times and a lighter database workload.

Key Challenges

1. Slow Endpoints

Certain API routes took 800ms to 2s to respond, especially those involving:

- Joins across multiple tables
- Filters on non-indexed fields
- N+1 queries in GraphQL resolvers

2. Heavy Load on Database

The database was overwhelmed by the same queries being issued repeatedly from multiple services.

3. No Caching Strategy

Every request hit the database, even for static data such as categories or public content.

4. High Latency for Global Users

Users far from the server experienced 1–2s added latency.

Our Solution

1. REST & GraphQL API Refactoring

We improved the core API architecture:

REST Improvements

- Batch fetching
- Reduced payload sizes
- Query parameter validation
- Pagination & filtering
- Async processing for heavy tasks
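To make the query parameter validation and pagination items concrete, here is a minimal sketch. It validates `limit` and `cursor` values as they would arrive from a query string, then returns one page using keyset-style pagination (rows with an `id` greater than the cursor). The names `parse_params`, `paginate`, `Page`, and the cap of 100 are assumptions for illustration and do not come from the client's codebase.

```python
from dataclasses import dataclass
from typing import Optional

MAX_LIMIT = 100  # assumed upper bound, not taken from the project


@dataclass
class Page:
    items: list
    next_cursor: Optional[int]


def parse_params(raw: dict) -> tuple[int, int]:
    """Validate raw query-string values and return (limit, cursor)."""
    try:
        limit = int(raw.get("limit", 20))
        cursor = int(raw.get("cursor", 0))
    except (TypeError, ValueError):
        raise ValueError("limit and cursor must be integers") from None
    if not 1 <= limit <= MAX_LIMIT:
        raise ValueError(f"limit must be between 1 and {MAX_LIMIT}")
    if cursor < 0:
        raise ValueError("cursor must be non-negative")
    return limit, cursor


def paginate(rows: list[dict], raw: dict) -> Page:
    """Return at most `limit` rows whose id is greater than `cursor`."""
    limit, cursor = parse_params(raw)
    matching = [r for r in sorted(rows, key=lambda r: r["id"]) if r["id"] > cursor]
    items = matching[:limit]
    # Only hand out a cursor when more rows remain after this page.
    next_cursor = items[-1]["id"] if len(matching) > limit else None
    return Page(items=items, next_cursor=next_cursor)
```

In a real endpoint the filtering would happen in SQL (`WHERE id > :cursor ORDER BY id LIMIT :limit`) rather than in memory; the list comprehension here only stands in for that query.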

GraphQL Improvements

- Avoided N+1 query issues using DataLoader
- Optimized resolvers for minimal DB calls
- Field-level caching for expensive fields
- Preloading associations

This drastically reduced backend computation.
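The batching idea behind DataLoader can be sketched in a few lines. The block below is not the DataLoader library itself but a simplified stand-in written with `asyncio`: every key requested in the same event-loop tick is collected and resolved with one batch call, so N resolvers cost one query instead of N. `BatchLoader`, `fetch_authors`, and `resolve_authors` are hypothetical names, and the in-memory `fetch_authors` stands in for a single `WHERE id IN (...)` query.

```python
import asyncio
from typing import Awaitable, Callable


class BatchLoader:
    """Collects keys requested in the same event-loop tick into one batch call."""

    def __init__(self, batch_fn: Callable[[list], Awaitable[list]]):
        self._batch_fn = batch_fn
        self._pending: dict = {}  # key -> Future shared by all callers
        self._task = None         # keep a reference so the task is not collected

    def load(self, key) -> asyncio.Future:
        loop = asyncio.get_running_loop()
        if key not in self._pending:
            if not self._pending:
                # First key of this tick: schedule exactly one dispatch.
                self._task = loop.create_task(self._dispatch())
            self._pending[key] = loop.create_future()
        return self._pending[key]

    async def _dispatch(self) -> None:
        pending, self._pending = self._pending, {}
        keys = list(pending)
        try:
            values = await self._batch_fn(keys)  # must follow the order of keys
            for key, value in zip(keys, values):
                pending[key].set_result(value)
        except Exception as exc:
            for fut in pending.values():
                if not fut.done():
                    fut.set_exception(exc)


batch_calls: list = []  # records each batch, to show how many "queries" ran


async def fetch_authors(ids: list) -> list:
    """Hypothetical batch function; stands in for one IN (...) query."""
    batch_calls.append(list(ids))
    return [{"id": i, "name": f"author-{i}"} for i in ids]


async def resolve_authors(author_ids: list) -> list:
    loader = BatchLoader(fetch_authors)

    async def resolve_one(author_id):
        return await loader.load(author_id)

    return await asyncio.gather(*(resolve_one(a) for a in author_ids))
```

Calling `asyncio.run(resolve_authors([1, 2, 1]))` resolves three fields with a single call to `fetch_authors`, and the repeated key is fetched only once.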

2. Deep Database Query Optimization

We audited DB performance using tools like:

- pg_stat_statements (Postgres)
- EXPLAIN ANALYZE
- Slow query logs

Optimizations included:

- Creating missing indexes
- Rewriting slow queries
- Using materialized views
- Partitioning large tables
- Normalizing heavy data structures

Result:

Queries that took 1.2 seconds now execute in 40–80ms.
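The effect of a missing index is easy to reproduce on a small scale. The sketch below uses SQLite from the Python standard library as a stand-in for Postgres so that it runs anywhere, and `EXPLAIN QUERY PLAN` in place of Postgres's `EXPLAIN ANALYZE`. The `orders` table, its columns, and the index name are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"


def plan(sql: str) -> str:
    """Return the planner's description of how it would run the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the detail text


before = plan(QUERY)  # no index yet: the planner has to scan the table
conn.execute("CREATE INDEX idx_orders_customer_id ON orders (customer_id)")
after = plan(QUERY)   # with the index: the planner can search it directly

print("before:", before)
print("after: ", after)
```

The same workflow applies on Postgres: find the slow statement in `pg_stat_statements`, inspect it with `EXPLAIN ANALYZE`, add the index, and compare the plans.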

3. Redis Caching Layer

We introduced Redis for:

✔ Hot Data Caching

- User profiles
- Product lists
- Category trees
- Frequently accessed dashboards

✔ API Response Caching

Storing complete REST/GraphQL responses.

✔ Expiry Policies

- TTL for live data
- Invalidation triggers for updates

✔ Session Caching (Optional)

Result:

Database load dropped by 35–60% during peak traffic.
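The TTL and invalidation policies above follow the usual cache-aside pattern, sketched here. To keep the example runnable without a Redis server, `TTLCache` is an in-memory stand-in that mimics only the `GET`, `SETEX`, and `DEL` behaviour; in production the same two functions would talk to a Redis client instead. The key name `categories:tree`, the 300-second TTL, and the function names are assumptions.

```python
import json
import time
from typing import Callable, Optional


class TTLCache:
    """In-memory stand-in for Redis: string values with per-key expiry."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._data: dict = {}  # key -> (value, expires_at)

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expire lazily on read
            return None
        return value

    def setex(self, key: str, ttl_seconds: float, value: str) -> None:
        self._data[key] = (value, self._clock() + ttl_seconds)

    def delete(self, key: str) -> None:
        self._data.pop(key, None)


CATEGORY_KEY = "categories:tree"  # hypothetical key name


def get_category_tree(cache, load_from_db, ttl_seconds: float = 300):
    """Read path: try the cache, fall back to the database, then store."""
    cached = cache.get(CATEGORY_KEY)
    if cached is not None:
        return json.loads(cached)
    tree = load_from_db()
    cache.setex(CATEGORY_KEY, ttl_seconds, json.dumps(tree))
    return tree


def update_categories(cache, write_to_db, new_tree) -> None:
    """Write path: persist first, then invalidate so the next read refills."""
    write_to_db(new_tree)
    cache.delete(CATEGORY_KEY)
```

Deleting the key on write, rather than overwriting it, keeps the write path simple: the next read repopulates the cache from the database.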

4. CDN Caching for Global Performance

We added CDN caching (Cloudflare/AWS CloudFront) for:

- Static JSON
- Public GET endpoints
- Common GraphQL responses for non-authenticated users

Edge cache entries are keyed on the URL and query params.

Global users now get responses in 50–150ms.
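Two pieces of logic usually sit behind this kind of edge caching: deciding which responses may be cached by a shared cache, and normalizing the URL so that equivalent requests share one cache entry. The sketch below shows both under assumed rules (anonymous GET requests are cacheable for 60 seconds at the edge, everything else is not). The function names and the 60-second value are illustrative, and whether a particular CDN honours these headers depends on how it is configured.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit


def cache_control(method: str, authenticated: bool, s_maxage: int = 60) -> str:
    """Return a Cache-Control value for a response."""
    if method.upper() == "GET" and not authenticated:
        # s-maxage applies to shared caches such as a CDN;
        # max-age=0 makes browsers revalidate instead of reusing a stale copy.
        return f"public, max-age=0, s-maxage={s_maxage}"
    # Personalized or mutating requests must never be stored at the edge.
    return "private, no-store"


def cache_key(url: str) -> str:
    """Normalize a URL so that parameter order does not split the cache."""
    parts = urlsplit(url)
    params = sorted(parse_qsl(parts.query, keep_blank_values=True))
    query = urlencode(params)
    return f"{parts.path}?{query}" if query else parts.path
```

For example, `/products?page=2&category=shoes` and `/products?category=shoes&page=2` map to the same key, so the second request can be served from the entry created by the first.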

5. Background Jobs for Heavy Workflows

Heavy tasks were moved off the request path into worker queues and background jobs for asynchronous processing:

- Analytics updates
- Report generation
- Notification sending

This reduced pressure on the main API.
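The pattern is the same whatever queueing system is used: the request handler enqueues a job and returns immediately, and a separate worker does the slow part. The sketch below uses a thread and `queue.Queue` from the standard library as a stand-in for a real job queue. `handle_request`, `send_notification`, and the message text are hypothetical.

```python
import queue
import threading

job_queue: queue.Queue = queue.Queue()
sent_notifications: list = []  # stands in for an email/push provider


def send_notification(user_id: int, message: str) -> None:
    """The slow task; here it only records what would have been sent."""
    sent_notifications.append((user_id, message))


def worker() -> None:
    """Pull jobs until a None sentinel arrives."""
    while True:
        job = job_queue.get()
        try:
            if job is None:
                return
            func, args = job
            func(*args)
        except Exception as exc:  # one failing job should not kill the worker
            print(f"job failed: {exc!r}")
        finally:
            job_queue.task_done()


def start_worker() -> threading.Thread:
    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread


def handle_request(user_id: int) -> dict:
    """API handler: enqueue the heavy work and respond right away."""
    job_queue.put((send_notification, (user_id, "Your report is ready")))
    return {"status": "accepted"}
```

A production setup would replace the in-process queue with a durable one so that jobs survive restarts and can be retried.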

6. Performance Monitoring & Metrics

We implemented:

- Prometheus + Grafana dashboards
- Query-level tracing
- API latency monitoring
- Redis hit/miss ratio tracking

This gave us full visibility into performance over time.
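As a rough picture of what these dashboards are built on, the sketch below tracks the two numbers mentioned above: the cache hit ratio and request latency percentiles. It is a plain-Python stand-in, not the Prometheus client, where the same data would typically be exposed as counters and a histogram. The `Metrics` class and its method names are assumptions.

```python
import math
import time
from contextlib import contextmanager


class Metrics:
    """Minimal in-process metrics: cache hits/misses and request latencies."""

    def __init__(self) -> None:
        self.hits = 0
        self.misses = 0
        self.latencies_ms: list = []

    def record_cache(self, hit: bool) -> None:
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

    @contextmanager
    def timed(self):
        """Time the wrapped block and record its duration in milliseconds."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.latencies_ms.append((time.perf_counter() - start) * 1000)

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile of the recorded latencies."""
        if not self.latencies_ms:
            raise ValueError("no latencies recorded")
        ordered = sorted(self.latencies_ms)
        rank = max(1, math.ceil(p / 100 * len(ordered)))
        return ordered[rank - 1]
```

Wrapping a handler in `with metrics.timed():` and calling `metrics.record_cache(hit)` on each cache lookup is enough to chart p95 latency and hit ratio over time.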

Architecture Diagram (Text Version)

Results & Impact

⚡ Response Time Reduced by 70–90%

Heavy endpoints dropped from 1–2 seconds to 100–200ms.

💾 Database Load Reduced by 40–60%

Redis + query optimization dramatically reduced DB calls.

🌍 Global Users Experienced Faster APIs

CDN-level caching improved latency across continents.

🔒 Stable API Under High Traffic

The system now stays stable during:

- Sales events
- Feature launches
- Traffic spikes

🚀 Better Developer Experience

Clean resolvers, optimized queries, and caching reduced debugging overhead.

Conclusion

By optimizing database queries, improving REST/GraphQL design, and adding Redis + CDN caching, we built a high-performance API capable of handling heavy traffic with minimal latency and reduced infrastructure load.

The platform is now:

- Faster
- More scalable
- More efficient
- Better suited for global growth

Written by

Oliver Thomas

Oliver Thomas is a passionate developer and tech writer. He crafts innovative solutions and shares insightful tech content with clarity and enthusiasm.