Client

A technology company running 10+ microservices across several virtual machines. Each service had:

- Separate environments
- Different dependencies
- Inconsistent OS settings
- Frequent deployment issues
- Long onboarding time for new developers

They wanted to modernize their deployment strategy and create a containerized, scalable microservice ecosystem.

Project Overview

The goal was to migrate the platform from:

❌ VM-based hosting

➡️ to

✔ Dockerized microservices with unified environments

✔ Optional orchestration via Docker Compose or Kubernetes

The new setup needed to:

- Reduce setup time
- Improve deployment speed
- Simplify service scaling
- Remove dependency conflicts
- Provide a standardized environment
- Run on cloud platforms (AWS, Azure, GCP) or on-prem hosting

Key Challenges

1. Services on Different VMs with Different Stacks

Each VM had different:

- OS versions
- JDK versions
- System libraries
- Environment variables

This made issues hard to debug and hard to reproduce.

2. Deployment Was Slow & Error-Prone

A deployment required:

- Updating dependencies manually
- Restarting services manually
- Fixing OS-level mismatches
- Long rollbacks

Downtime risk was high.

3. Developers Needed Days to Set Up Environment

New engineers spent 2–4 days configuring:

- Tools
- Dependencies
- Database connections
- Service configs

4. Scaling Was Manual

To scale a service:

- A new VM had to be provisioned
- Dependencies had to be installed manually
- The reverse proxy had to be updated manually

This was inefficient, especially during traffic spikes.

Our Solution

1. Containerizing Every Microservice

We created Dockerfiles for each service:

- Java/Spring Boot services
- Node.js services
- Python services
- Redis / Postgres / Nginx / Worker services

Optimizations included:

- Multi-stage builds
- Minimal base images
- Reproducible builds
- Environment variables via .env files or a secrets manager

Result:

Every service now runs consistently, anywhere.

2. Replacing VM Deployments with Docker Compose

For environments without Kubernetes (staging/dev), we built a Docker Compose stack.

Benefits:

- One command to start the entire platform
- Local environment identical to production
- Easier debugging and log access
- No dependency conflicts
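
A Compose stack of this kind can be sketched as follows. This is an illustrative Python generator, not the client's file: the services api and db, the image names, the port, and the secret path are all hypothetical. It builds the Compose definition as a dictionary and prints it as JSON; because JSON is also valid YAML, Compose can generally read the output directly.

```python
import json


def compose_stack(api_image: str, postgres_version: str = "16") -> dict:
    """Build a minimal two-service Compose definition (hypothetical names)."""
    return {
        "services": {
            "db": {
                "image": f"postgres:{postgres_version}",
                # The official postgres image reads the password from this file
                "environment": {"POSTGRES_PASSWORD_FILE": "/run/secrets/db_password"},
                "secrets": ["db_password"],
                # pg_isready ships with the official postgres image
                "healthcheck": {
                    "test": ["CMD-SHELL", "pg_isready -U postgres"],
                    "interval": "5s",
                    "retries": 10,
                },
            },
            "api": {
                "image": api_image,
                "env_file": [".env"],
                "ports": ["8080:8080"],
                # Start api only after db's health check passes
                "depends_on": {"db": {"condition": "service_healthy"}},
            },
        },
        "secrets": {"db_password": {"file": "./secrets/db_password.txt"}},
    }


if __name__ == "__main__":
    print(json.dumps(compose_stack("registry.example.com/api:1.0.0"), indent=2))
```

Saving the output as compose.json and running docker compose -f compose.json up -d would start the whole stack with one command. The service_healthy condition makes the API wait for Postgres to accept connections, not merely for its container to start.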

3. Kubernetes for Production-Grade Scaling

For environments requiring high scalability, we deployed services to a K8s cluster (EKS, AKS, or GKE):

- Deployment & StatefulSet configs
- Horizontal Pod Autoscaling
- Liveness/Readiness probes
- ConfigMaps & Secrets
- Nginx Ingress or Traefik
- Observability with Prometheus/Grafana
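
Liveness and readiness probes usually call HTTP endpoints that each service exposes. Below is a minimal standard-library Python sketch, assuming the paths /healthz (liveness) and /readyz (readiness) and port 8080; these are common conventions, not the client's actual endpoints. A failing liveness probe makes Kubernetes restart the container, while a 503 from readiness only removes the pod from Service traffic.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class ProbeState:
    """Readiness flag, set once dependencies (DB, cache, ...) are reachable."""

    def __init__(self) -> None:
        self._ready = threading.Event()

    def mark_ready(self) -> None:
        self._ready.set()

    def is_ready(self) -> bool:
        return self._ready.is_set()


def make_handler(state: ProbeState) -> type[BaseHTTPRequestHandler]:
    class ProbeHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            if self.path == "/healthz":
                status = 200  # liveness: the process is up and answering
            elif self.path == "/readyz":
                # readiness: 503 tells Kubernetes not to route traffic yet
                status = 200 if state.is_ready() else 503
            else:
                status = 404
            body = json.dumps({"status": status}).encode()
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format: str, *args) -> None:
            pass  # keep frequent probe requests out of the logs

    return ProbeHandler


def serve(state: ProbeState, host: str = "0.0.0.0",
          port: int = 8080) -> ThreadingHTTPServer:
    """Bind the probe server; call serve_forever() on the result to run it."""
    return ThreadingHTTPServer((host, port), make_handler(state))
```

In use, a service would create state = ProbeState(), call state.mark_ready() once its dependency checks pass, and run serve(state).serve_forever(). The Deployment's livenessProbe and readinessProbe would then point httpGet at these two paths.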

Kubernetes enabled:

- Automatic scaling
- Zero-downtime updates
- Self-healing pods
- Simplified rollback
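
The automatic scaling above comes from the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default). The Python sketch below reproduces only that calculation for intuition; it omits the controller's stabilization window and pod-readiness handling, and the default min/max bounds are hypothetical example values.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 2,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Core HPA scaling rule (simplified: no stabilization window)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: keep the current replica count
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the minReplicas/maxReplicas bounds set on the autoscaler
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 pods averaging 90% CPU against a 60% target give a ratio of 1.5, so the autoscaler asks for ceil(4.5) = 5 pods; at 63% the ratio of 1.05 falls inside the tolerance and nothing changes.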

4. CI/CD Integration

We integrated Docker builds into CI/CD pipelines:

- GitHub Actions
- GitLab CI
- Jenkins
- Bitbucket Pipelines

Each pipeline included these stages:

- Build the Docker image
- Run tests
- Push to the registry
- Deploy to Compose/K8s

This made deployment faster, more consistent, and automated.
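
The four stages are the same whichever CI system runs them. As a CI-agnostic Python sketch, the script below composes the shell commands for one service and runs them in order, stopping at the first failure. The registry, the pytest test command, and the convention that the Deployment and its container are both named after the service are hypothetical; the commands themselves are standard docker and kubectl invocations.

```python
import subprocess


def pipeline_commands(service: str, registry: str, git_sha: str,
                      test_cmd: list[str]) -> list[list[str]]:
    """Build -> test -> push -> deploy, tagging the image with the commit SHA."""
    image = f"{registry}/{service}:{git_sha}"
    return [
        ["docker", "build", "-t", image, "."],
        # Run the test suite inside the freshly built image
        ["docker", "run", "--rm", image, *test_cmd],
        ["docker", "push", image],
        # Rolling update: point the Deployment's container at the new tag
        ["kubectl", "set", "image", f"deployment/{service}", f"{service}={image}"],
    ]


def run_pipeline(commands: list[list[str]], dry_run: bool = False) -> list[str]:
    """Run each step in order; check=True aborts on the first non-zero exit."""
    executed = []
    for cmd in commands:
        executed.append(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
    return executed
```

Tagging by commit SHA rather than latest makes every deployment traceable and a rollback a matter of redeploying an earlier tag. For a Compose target, the last step would instead be along the lines of docker compose pull followed by docker compose up -d.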

5. Centralized Reverse Proxy & SSL Termination

We migrated routing from per-VM configs to:

- Nginx or Traefik in Docker
- Automatic SSL certificate renewal via ACME
- A centralized API gateway

This gave every environment consistent routing.

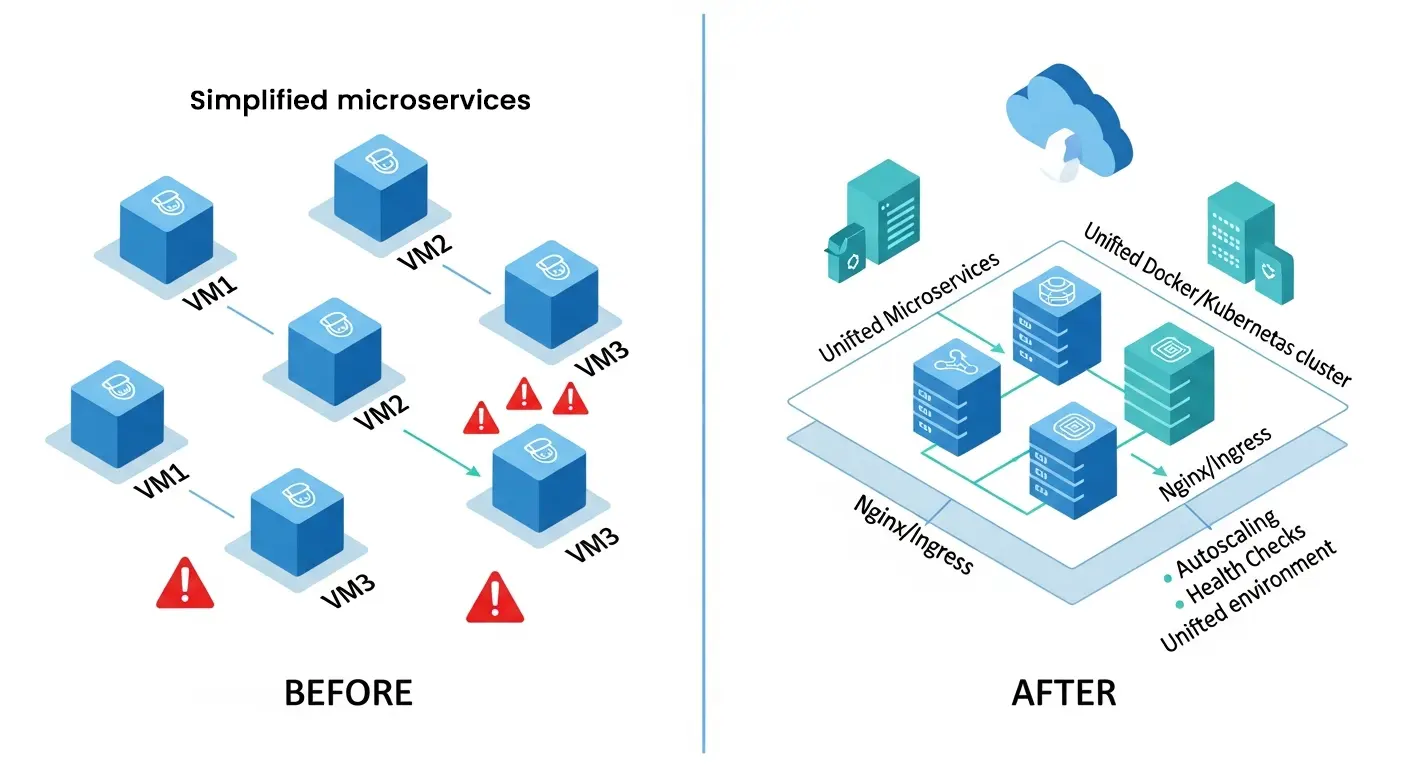

Architecture Before & After

Before (VM-based):

After (Docker / Kubernetes):

Results & Impact

⚡ Onboarding Time Reduced by 80%

From 3 days → 4 hours

Developers now run everything with a single command.
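
As a quick check of the headline figure: 3 days is 24 hours if counted as 8-hour working days (an assumption; the source does not say how days were counted), or 72 calendar hours. Either way, 4 hours is at least an 80% reduction:

```latex
\text{reduction}_{\text{working}} = \frac{24 - 4}{24} = \frac{20}{24} \approx 83\%
\qquad
\text{reduction}_{\text{calendar}} = \frac{72 - 4}{72} = \frac{68}{72} \approx 94\%
```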

🚀 Deployment Time Reduced by 70%

No manual VM login, no OS conflicts, no setup delays.

📦 Consistent Environments

Same Docker images run across:

- Dev
- QA
- Stage
- Production

“No more ‘it works on my machine’ problems.”

🌐 Simplified Scaling

Docker and Kubernetes enabled:

- Automatic horizontal scaling
- Adding instances in seconds
- No manual VM provisioning

🛡 Fewer Errors & Downtime

Monitoring + health checks = self-healing containers & zero-downtime updates.

💰 Cost Savings

Shutting down multiple VMs → running efficient containers → lower compute cost.

Conclusion

By transforming the client’s VM-based microservice platform into a containerized, Docker-powered architecture, we delivered:

- Faster onboarding
- Consistent environments
- Automated deployment
- Easy scaling
- Reduced operational complexity
- A modern cloud-native foundation

This upgrade makes the client’s platform future-ready and easier to maintain as services grow.

Written by

Oliver Thomas

Oliver Thomas is a passionate developer and tech writer. He crafts innovative solutions and shares insightful tech content with clarity and enthusiasm.